Two forms of glue hold the euro together. First, the economic costs of break-up would be great. The minute investors heard that Greece was seriously contemplating reintroducing the drachma with the purpose of depreciating it against the euro, or against a “new Deutsche mark,” they would wire all their money to Frankfurt. Greece would experience the mother of all banking crises. The “new Deutsche mark” would then shoot through the roof, destroying Germany’s export industry.

In the conclusion, he says "I argued that it is the roach motel of currencies. Like the Hotel California of the song: you can check in, but you can’t check out." To be precise, that's true of the Roach Motel (see here, if you don't know what that's all about), but, according to the Eagles, you can actually check out of the Hotel California, though you can never leave (hmm... sounds kind of like "Brexit"...).

More generally, those predicting, or advocating, the euro’s demise tend to underestimate the technical difficulties of reintroducing national currencies.

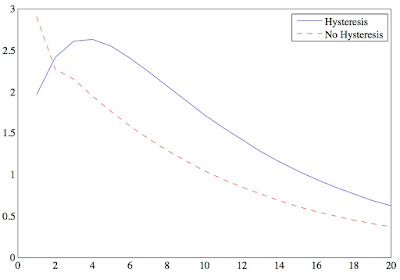

In any case, the fact it hangs together because eurozone members feel trapped by the costs of exit is hardly an affirmative case for the single currency. In Greece's case, its hard to believe that the costs of exit really would have been higher than the costs of staying; this FT Alphablog post by Matthew Klein pointed out this figure from the IMF's Article IV report:

The IMF also released a self-evaluation of its Greece program, which Charles Wyplosz analyses in a VoxEU column. See also: this Martin Sandbu column and this article by Landon Thomas. Matt O'Brien's write-up of research by House, Tesar and Proebsting on the impact of austerity in Europe is also relevant.

The fact that the eurozone rolls on, with no sign that a depression in one of its smaller constituent economies is enough to bring about fundamental change, is disturbing. It wouldn't be able to ignore the election of Marine Le Pen as President of France, though - Gavyn Davies considers the consequences of that.

Update: Cecchetti and Schoenholtz also had a good post on the implications of a Le Pen win.